In large organisations the deployment of a SIEM platform is a major milestone. For many teams the primary goal is to gain visibility, streamline investigations and improve response speed.

The platform we focus on here is Elastic SIEM, built on the Elastic Stack (Elasticsearch, Logstash, Kibana and Beats). It offers power, scalability and flexibility. Yet even the strongest tool can falter if the implementation lacks structure.

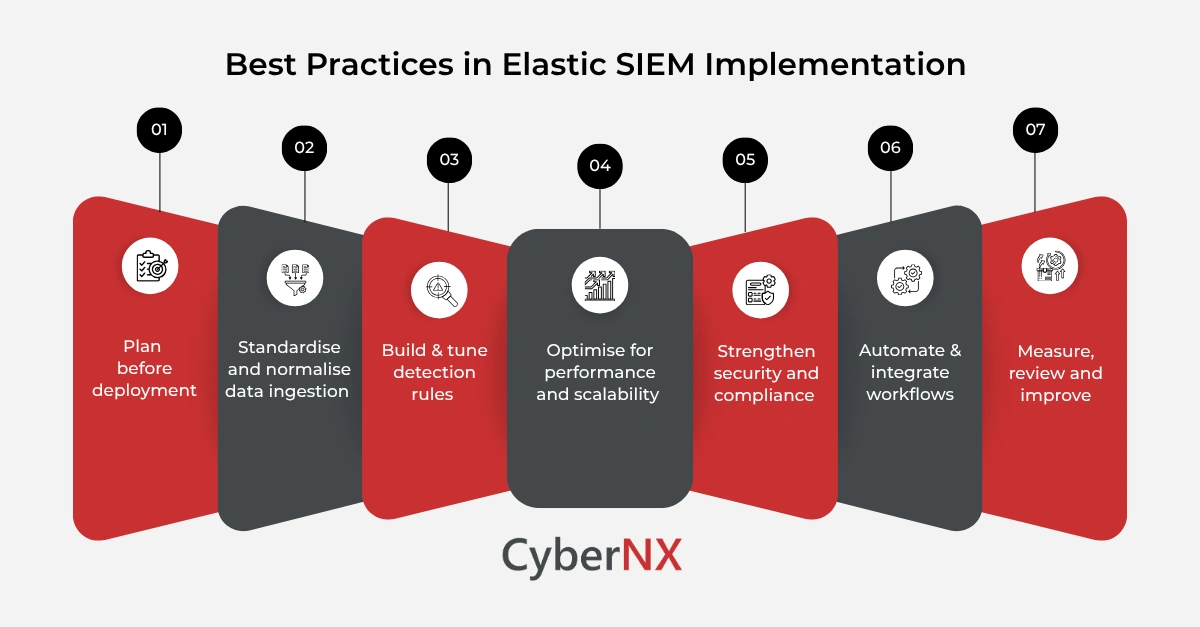

We’ve seen that success lies not in installation alone, but in data quality, detection engineering, search speed, visual correlation and continuous review. In this guide we walk you through Elastic SIEM implementation best practices to help you build a resilient SOC capability.

Plan the architecture before you deploy

A robust architecture is the foundation of a successful Elastic SIEM implementation. Here’s how to approach it.

1. Define your data sources early

Identify firewalls, proxies, endpoints, cloud platforms and authentication systems. Knowing what you ingest helps shape infrastructure and workflows.

2. Estimate volumes and retention

Assess expected data volume, ingestion rate and retention needs. This enables you to size clusters and tier data appropriately.
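As a rough illustration of the sizing step, the sketch below multiplies daily ingest by retention, the number of copies and an indexing overhead factor. The function names, the 15% overhead and every figure in it are assumptions made for the example, not Elastic guidance; replace them with measurements from your own environment.

```python
import math


def estimate_storage_gb(daily_ingest_gb, retention_days, replicas=1, overhead=1.15):
    """Rough disk estimate: daily volume x retention x copies x overhead.

    `overhead` is an assumed allowance for index structures, not a vendor figure.
    """
    copies = 1 + replicas  # one primary plus its replicas
    return daily_ingest_gb * retention_days * copies * overhead


def nodes_needed(total_gb, usable_gb_per_node):
    """Number of data nodes required to hold `total_gb`, rounded up."""
    return math.ceil(total_gb / usable_gb_per_node)


# Hypothetical inputs: 200 GB/day, 7 days in the hot tier, 2 TB usable per node.
hot_gb = estimate_storage_gb(daily_ingest_gb=200, retention_days=7)
print(f"Hot tier: ~{hot_gb:.0f} GB across {nodes_needed(hot_gb, 2000)} node(s)")
```

Running the same calculation per tier gives a first-pass view of how retention choices drive node counts.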

3. Design tiers for performance and cost efficiency

Use hot, warm and cold data nodes to balance performance and storage cost. A tiered approach helps scalability as your environment grows.

4. Secure the setup

Apply TLS to inter-node and client communications, encrypt data at rest and enforce role-based access control (RBAC). These controls anchor your security posture within the platform itself.

When architecture is well-planned you avoid bottlenecks, reduce future rework and support growth. We’ve found that a solid start saves effort over time.

Standardise and normalise data ingestion

Data quality and consistency are key. Inconsistent ingestion undermines detection, correlation and investigation.

1. Normalise your data with a schema

Adopt the Elastic Common Schema (ECS) to map fields consistently across log types. Consistent fields let you build rules and dashboards that work reliably.
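A minimal sketch of what mapping to ECS can look like in practice. The vendor keys on the left (`src`, `dst` and so on) are hypothetical; the ECS field names on the right are real. Fields without a mapping are reported rather than silently dropped, so they can be reviewed.

```python
# Hypothetical firewall keys mapped to ECS field names.
FIREWALL_TO_ECS = {
    "src": "source.ip",
    "dst": "destination.ip",
    "spt": "source.port",
    "dpt": "destination.port",
    "act": "event.action",
    "usr": "user.name",
}


def to_ecs(raw, mapping):
    """Return (nested ECS-style document, list of keys that had no mapping)."""
    doc = {}
    unmapped = []
    for key, value in raw.items():
        target = mapping.get(key)
        if target is None:
            unmapped.append(key)
            continue
        # Build the nested structure from the dotted ECS name.
        *parents, leaf = target.split(".")
        node = doc
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return doc, unmapped


doc, unmapped = to_ecs({"src": "10.0.0.5", "dpt": 443, "vendor_code": 7}, FIREWALL_TO_ECS)
print(doc)
print("needs review:", unmapped)
```

In a real deployment this mapping would normally live in a Logstash filter or an ingest pipeline; the Python version only shows the idea.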

2. Use enrichment pipelines

Use Logstash or ingest-node pipelines to enrich data with geo-location, threat intelligence tags and user context. Deploy Beats (Filebeat, Winlogbeat, Packetbeat) to collect endpoint and network data. Elastic also offers modules for cloud platforms such as AWS and Azure, and for network devices.
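To illustrate enrichment, here is a sketch of an ingest pipeline body built as a Python dictionary. The `geoip`, `append` and `set` processors are standard Elasticsearch ingest processors; the tag value and the `labels.asset_owner` field are assumptions chosen for the example. The resulting JSON is the kind of body you would send with `PUT _ingest/pipeline/<name>`.

```python
import json

# Illustrative pipeline: field choices and values are assumptions.
enrich_pipeline = {
    "description": "Sketch: add geo and ownership context to network events",
    "processors": [
        # Look up the source IP and write the result under source.geo.
        {"geoip": {"field": "source.ip", "target_field": "source.geo", "ignore_missing": True}},
        # Tag the event so downstream rules can tell it was enriched.
        {"append": {"field": "tags", "value": ["geo_enriched"]}},
        # Default owner, only set when the field is not already present.
        {"set": {"field": "labels.asset_owner", "value": "unknown", "override": False}},
    ],
}

print(json.dumps(enrich_pipeline, indent=2))
```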

Standardisation improves correlation, lowers false positives and makes querying simpler. It means your SOC team focuses on threats rather than struggling with data inconsistencies.

Pro Tip: Pick a small set of critical log sources and normalise these first. Then expand gradually. This phased approach reduces early complexity and builds confidence.

Build and tune detection rules

Effective detection is what gives a SIEM its value. For Elastic SIEM this means both leveraging built-in content and customising for your environment.

1. Start with pre-built rules

Elastic ships hundreds of rules mapped to the MITRE ATT&CK framework. These give you baseline coverage.

2. Develop custom rules

Use EQL or KQL to create rules that reflect your business-specific behaviour and threat profile. Thresholds, logic and context matter. Write documentation for each rule: its objective, logic, MITRE mapping and expected result.
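One way to keep rule documentation consistent is to hold it in a small structured record. The sketch below is an assumption about how a team might do this, not an Elastic feature: the `RuleDoc` class and its field names are hypothetical, while the KQL query uses real ECS fields and T1110 is the MITRE ATT&CK technique ID for Brute Force.

```python
from dataclasses import dataclass, asdict


@dataclass
class RuleDoc:
    """Hypothetical documentation record for one custom detection rule."""
    name: str
    objective: str
    language: str
    query: str
    mitre_technique: str
    expected_result: str

    def missing_fields(self):
        """Names of fields left blank, so incomplete docs can be caught in review."""
        return [k for k, v in asdict(self).items() if not str(v).strip()]


brute_force = RuleDoc(
    name="Repeated failed logins",
    objective="Surface possible password guessing against one account",
    language="KQL",
    query="event.category:authentication and event.outcome:failure",
    mitre_technique="T1110 (Brute Force)",
    expected_result="Alert when failures for one user.name exceed the agreed threshold",
)

print(brute_force.missing_fields())
```

Keeping such records in version control alongside the rules gives reviewers a single place to check intent against logic.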

3. Tune continuously

Detection engineering is iterative. Monitor false positives, refine logic and adapt thresholds. This reduces alert fatigue and improves efficiency.
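The tuning loop can be supported with a simple calculation over analyst verdicts. This sketch assumes each closed alert carries a rule name and a disposition of either `true_positive` or `false_positive`; those labels, the function names and the 50% cut-off are illustrative assumptions.

```python
from collections import Counter


def false_positive_rates(alerts):
    """alerts: iterable of (rule_name, disposition) pairs. Returns rate per rule."""
    totals = Counter()
    false_positives = Counter()
    for rule, disposition in alerts:
        totals[rule] += 1
        if disposition == "false_positive":
            false_positives[rule] += 1
    return {rule: false_positives[rule] / totals[rule] for rule in totals}


def rules_to_tune(rates, max_fp_rate=0.5):
    """Rules whose false-positive rate exceeds the assumed threshold."""
    return sorted(rule for rule, rate in rates.items() if rate > max_fp_rate)


closed_alerts = [
    ("Repeated failed logins", "false_positive"),
    ("Repeated failed logins", "false_positive"),
    ("Repeated failed logins", "true_positive"),
    ("New admin account", "true_positive"),
]
rates = false_positive_rates(closed_alerts)
print(rules_to_tune(rates))
```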

Without rule tuning, even the best platform generates noise. When you prioritise accuracy, you free your analysts to focus on real threats and improve mean time to detect (MTTD).

Optimise for performance and scalability

As data volumes grow and threat landscapes evolve, performance tuning becomes critical.

1. Use Index Lifecycle Management (ILM)

Implement ILM policies to move data through hot, warm and cold tiers as it ages. This helps control storage cost while keeping queries on recent data fast.
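For illustration, an ILM policy body might look like the sketch below, expressed as a Python dictionary that serialises to the JSON sent with `PUT _ilm/policy/<name>`. The phase and action names are standard ILM options; every age and size value is a placeholder assumption and should be derived from your own retention and performance requirements.

```python
import json

# Placeholder ages and sizes; adjust to your retention policy.
ilm_policy = {
    "policy": {
        "phases": {
            "hot": {
                "actions": {
                    # Roll over to a new index when a primary shard or the index age hits a limit.
                    "rollover": {"max_primary_shard_size": "50gb", "max_age": "7d"}
                }
            },
            "warm": {
                "min_age": "7d",
                "actions": {"forcemerge": {"max_num_segments": 1}},
            },
            "cold": {
                "min_age": "30d",
                "actions": {"set_priority": {"priority": 0}},
            },
            "delete": {
                "min_age": "90d",
                "actions": {"delete": {}},
            },
        }
    }
}

print(json.dumps(ilm_policy, indent=2))
```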

2. Keep shard sizes balanced

Target shard sizes of roughly 20 to 50 GB for optimal performance. Uneven or oversized shards can slow queries and increase maintenance.
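A quick way to sanity-check shard planning is to derive the primary shard count from the expected index size. The helper names and the 40 GB default target below are assumptions for the sketch; the 20 to 50 GB bounds come from the guidance above.

```python
import math


def primary_shards_for(index_size_gb, target_shard_gb=40):
    """Primary shards needed so each shard lands near the target size."""
    if index_size_gb <= 0:
        raise ValueError("index_size_gb must be positive")
    return max(1, math.ceil(index_size_gb / target_shard_gb))


def shard_size_ok(shard_gb, low=20, high=50):
    """True when a shard falls inside the recommended size band."""
    return low <= shard_gb <= high


index_gb = 200  # hypothetical index size
shards = primary_shards_for(index_gb)
print(shards, shard_size_ok(index_gb / shards))
```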

3. Monitor health and plan for spikes

Use monitoring tools such as Stack Monitoring in Kibana to track latency and cluster health. For ingestion spikes, prefer scaling out data nodes rather than overloading existing hardware.

Without performance tuning you risk slow searches, analyst frustration and missed detections. A well-tuned platform supports rapid investigation and reliable response.

Strengthen security and compliance

Since the SIEM becomes a central repository for your security logs, it must be treated as a high-value target and governed accordingly.

1. Secure your stack

Enable TLS for all node communications, secure Kibana with HTTPS and enforce least-privilege access through role-based access control (RBAC). Use enterprise authentication (SAML, OpenID Connect) if possible.

2. Audit and compliance readiness

Enable audit logging to record administration, rule changes and user access. Align your SIEM configuration with regulatory frameworks such as PCI DSS, GDPR and ISO 27001, as well as regional mandates.

If your SIEM is compromised, you risk undermining your full security posture. You also need to demonstrate governance, traceability and compliance in audits.

Automate and integrate response workflows

Detection is only half the story. A full-fledged SOC connects detection to response.

1. Use built-in workflows

The Elastic Security app includes case management, a detection engine and alerting connectors. Together these support incident workflows end to end.

2. Integrate with external tools

Connect alerts to platforms like ServiceNow, Slack or email. Use APIs to automate routine operations such as rule updates or enrichment tasks. This lowers manual effort and speeds containment.
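Kibana's built-in connectors cover most of these integrations without custom code. For scripted automation, the sketch below shows the general shape of posting an alert summary to a Slack incoming webhook. The webhook URL, the alert field names and the `build_slack_request` helper are all hypothetical; the request is built but deliberately not sent.

```python
import json
import urllib.request


def build_slack_request(webhook_url, alert):
    """Build (but do not send) a POST request carrying a one-line alert summary."""
    text = f"[{alert['severity'].upper()}] {alert['rule']} on {alert['host']}"
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL and alert; neither refers to a real system.
req = build_slack_request(
    "https://hooks.slack.example/placeholder",
    {"severity": "high", "rule": "Repeated failed logins", "host": "web-01"},
)
print(req.get_method(), req.data.decode("utf-8"))
# urllib.request.urlopen(req) would deliver it; omitted so the sketch has no side effects.
```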

Automation frees your analysts from repetitive tasks. It accelerates response. It helps you scale without needing equivalent head-count growth.

Measure, review and continuously improve

A SIEM implementation is not a one-time project. It must evolve with threats, business changes and technology shifts.

1. Track key metrics

Measure dwell time, false-positive rates, mean time to respond (MTTR) and rule performance. Build dashboards to visualise SOC metrics and health.
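As a sketch of how these metrics can be derived from incident records, the example below averages the gaps between timestamps. The record keys (`occurred`, `detected`, `resolved`) and the sample incidents are assumptions, and MTTR is taken here as detection to resolution; teams define it differently, so state your own definition on the dashboard.

```python
from datetime import datetime
from statistics import mean


def mean_minutes(incidents, start_key, end_key):
    """Average gap in minutes between two timestamps across incidents."""
    gaps = [(i[end_key] - i[start_key]).total_seconds() / 60 for i in incidents]
    return mean(gaps)


# Hypothetical incident records.
incidents = [
    {
        "occurred": datetime(2024, 1, 1, 9, 0),
        "detected": datetime(2024, 1, 1, 9, 30),
        "resolved": datetime(2024, 1, 1, 11, 30),
    },
    {
        "occurred": datetime(2024, 1, 2, 14, 0),
        "detected": datetime(2024, 1, 2, 14, 10),
        "resolved": datetime(2024, 1, 2, 15, 10),
    },
]

mttd = mean_minutes(incidents, "occurred", "detected")
mttr = mean_minutes(incidents, "detected", "resolved")
print(f"MTTD: {mttd:.0f} min, MTTR: {mttr:.0f} min")
```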

2. Conduct periodic reviews

Quarterly audits of data sources, rule accuracy and retention policies help ensure you stay aligned with business and threat changes. Staying current with the latest Elastic releases is also important.

If you neglect continuous improvement, you risk stagnation. Threats evolve. So should your detection and response capability.

Conclusion

Implementing Elastic SIEM successfully means more than installing software. It means building a data-driven, automated and resilient SOC function. By following these Elastic SIEM implementation best practices, you can turn Elastic SIEM into a powerful detection and response platform. At CyberNX, we partner with businesses to implement exactly that kind of approach. If you’d like support in designing, deploying or refining your Elastic SIEM, we’re here to help. Connect with us today for Elastic Stack Consulting Services and take your SOC to the next level.

Elastic SIEM implementation best practices FAQs

How many data sources should we onboard first into Elastic SIEM?

You should start with the most critical sources – such as endpoint logs, firewall traffic, authentication events – and onboard others iteratively. A phased approach reduces complexity and builds momentum.

What retention period is recommended for SIEM logs in Elastic?

Retention varies by business and regulatory requirements. A common pattern is to keep around 90 days in the hot tier, then move older data to warm and cold tiers. The key is balancing cost, performance and compliance.

How often should detection rules be reviewed and tuned?

Ideally you should review rules at least quarterly. However, if you deploy new business systems or new threat vectors emerge, reviews may be needed more frequently to keep ahead of risk.

Can Elastic SIEM scale to large enterprise volumes?

Yes – Elastic SIEM is designed to scale horizontally. With proper architecture (tiered nodes, balanced shards, monitoring) you can support large volumes of data and high query loads.