AI adoption is accelerating across enterprises. Models are embedded in customer service, security operations, analytics and decision-making workflows. Yet many security leaders still lack clear visibility into how these AI systems are built, trained and maintained.

This is where an AI Bill of Materials (AIBOM) becomes critical.

An AIBOM brings structure, clarity and accountability to AI environments that often feel opaque. For CISOs and technology leaders, it helps answer uncomfortable questions. What models are we using? Where did the data come from? Who maintains them? And what risks are hiding inside third-party AI components?

In this AIBOM guide, we explain what an AI Bill of Materials really means, why it matters now, and how organisations can adopt it without slowing innovation. We also share practical insights based on what we see across real enterprise environments.

What is AIBOM?

AIBOM stands for AI Bill of Materials. At its core, it is a structured inventory of all components that make up an AI system. This includes models, datasets, algorithms, libraries, frameworks, dependencies and update histories.
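To make the idea of a structured inventory concrete, here is a minimal sketch in Python. The class names, field names and example components are illustrative assumptions, not part of any AIBOM standard; a real programme would likely follow an established schema.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class Component:
    """One entry in the inventory (hypothetical field set)."""
    name: str
    kind: str          # e.g. "model", "dataset", "library"
    version: str
    supplier: str = "internal"


@dataclass
class AIBOM:
    """A structured inventory for a single AI system."""
    system: str
    components: list[Component] = field(default_factory=list)

    def add(self, component: Component) -> None:
        self.components.append(component)

    def by_kind(self, kind: str) -> list[Component]:
        # Filter the inventory, e.g. to list every dataset in use.
        return [c for c in self.components if c.kind == kind]

    def to_json(self) -> str:
        # Serialise the whole inventory so it can be stored or audited.
        return json.dumps(asdict(self), indent=2)


# Example data below is invented for illustration.
bom = AIBOM(system="support-chatbot")
bom.add(Component("intent-classifier", "model", "2.1.0"))
bom.add(Component("support-tickets-2023", "dataset", "2023-11"))
bom.add(Component("example-ml-framework", "library", "1.4.2", supplier="open-source"))
```

Calling `bom.to_json()` produces a machine-readable record, and `bom.by_kind("dataset")` answers a question such as "which datasets does this system depend on?" without anyone having to read through documentation.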

Think of an AIBOM as the AI equivalent of a software bill of materials (SBOM), but with a wider scope. AI systems do not just execute code. They learn from data, evolve over time and influence critical decisions.

The rise of regulations, supply chain attacks and AI misuse has pushed transparency from a theoretical ideal into a business requirement. Security leaders need evidence. Regulators demand traceability. Customers expect responsible AI.

An AIBOM helps meet all three.

The hidden risks inside modern AI systems

AI systems rarely exist in isolation. They are built using open-source libraries, pre-trained models, cloud services and third-party APIs. Each layer introduces risk.

Data provenance is often unclear. Training datasets may include biased, outdated or unlicensed information. Model updates can occur silently, changing behaviour without formal review. Dependencies can introduce vulnerabilities inherited from upstream providers.

Our experience shows that many organisations cannot fully explain how their AI systems work, even when those systems support critical operations.

Without AIBOM, these risks remain invisible.

How AIBOM differs from traditional SBOM

While SBOM focuses on software components, AIBOM goes further.

It captures dynamic elements such as training data sources, model versions, fine-tuning processes and inference pipelines. It also documents ethical, legal and security considerations linked to AI behaviour.

This distinction matters. A vulnerability in a dataset or model architecture can be just as damaging as a flaw in code. An AI Bill of Materials acknowledges this reality.

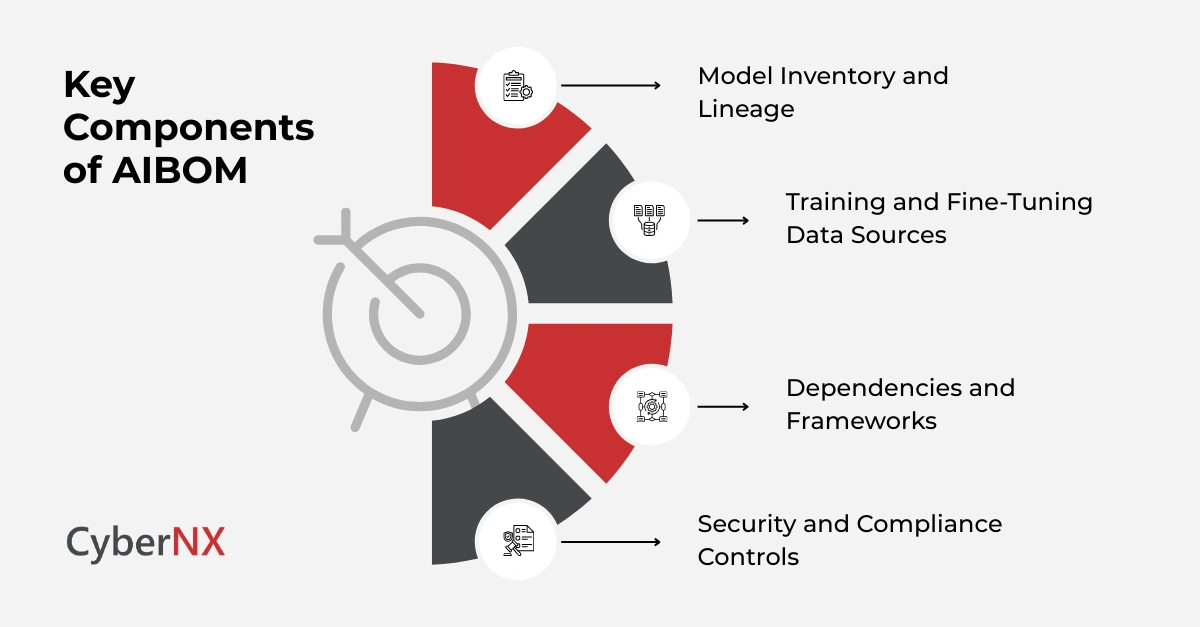

Core components of an effective AI Bill of Materials

An AIBOM is not a static document. It is a living system of record that evolves with your AI stack.

1. Model inventory and lineage

This section documents all models in use, including version history, ownership and deployment context. It also records whether models are developed internally or sourced externally.

Clear lineage enables faster incident response and accountability when models behave unexpectedly.
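As a rough illustration of how lineage could be recorded and queried, the sketch below links each model to the model it was derived from and walks that chain back to its origin. The record fields, model identifiers and owners are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelRecord:
    """A model inventory entry (hypothetical field set)."""
    model_id: str
    version: str
    owner: str
    source: str                      # "internal" or "external"
    deployed_in: str
    parent_id: Optional[str] = None  # model this one was derived from


def lineage(registry: dict[str, ModelRecord], model_id: str) -> list[str]:
    """Return the chain from a model back to its original ancestor."""
    chain: list[str] = []
    seen: set[str] = set()
    current: Optional[str] = model_id
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle in lineage at {current}")
        seen.add(current)
        record = registry[current]
        chain.append(f"{record.model_id}@{record.version}")
        current = record.parent_id
    return chain


# Invented example: an external base model fine-tuned twice internally.
registry = {
    "base-llm": ModelRecord("base-llm", "2024-01", "vendor", "external", "none"),
    "support-ft-v1": ModelRecord(
        "support-ft-v1", "1.0", "data-science", "internal", "staging", "base-llm"
    ),
    "support-ft-v2": ModelRecord(
        "support-ft-v2", "2.0", "data-science", "internal", "production", "support-ft-v1"
    ),
}

print(lineage(registry, "support-ft-v2"))
```

During an incident, a lookup like this shows at a glance whether unexpected behaviour might trace back to an internally tuned version or to the externally sourced base model.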

2. Training and fine-tuning data sources

Data transparency is central to AIBOM. Leaders need to know where data originates, how it is curated and whether it complies with legal and ethical standards.

This reduces exposure to bias, data leakage and regulatory penalties.

3. Dependencies and frameworks

AI systems rely on libraries, frameworks and cloud services. AIBOM tracks these dependencies and their update cycles.

This visibility supports proactive vulnerability management and patching.
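Dependency tracking is one of the easier parts to automate. The sketch below assumes a Python-based AI stack: it reads installed package versions with the standard library's `importlib.metadata` and compares them with what the AIBOM last recorded. The function names and the example versions are illustrative.

```python
from importlib.metadata import distributions
from typing import Optional


def installed_dependencies() -> dict[str, str]:
    """Map each installed Python package name to its version."""
    deps: dict[str, str] = {}
    for dist in distributions():
        name = dist.metadata["Name"]
        if name:  # skip entries with missing or malformed metadata
            deps[name.lower()] = dist.version
    return deps


def diff_dependencies(
    recorded: dict[str, str], current: dict[str, str]
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Return packages whose version differs, as name -> (recorded, current).

    None on the left means the package is new; None on the right means
    it has been removed since the AIBOM was last written.
    """
    changes: dict[str, tuple[Optional[str], Optional[str]]] = {}
    for name in sorted(set(recorded) | set(current)):
        old, new = recorded.get(name), current.get(name)
        if old != new:
            changes[name] = (old, new)
    return changes


# Invented snapshots for illustration.
recorded = {"numpy": "1.26.0", "requests": "2.31.0"}
current = {"numpy": "1.26.4", "urllib3": "2.2.1"}
changes = diff_dependencies(recorded, current)
```

In practice, `current` would come from `installed_dependencies()` in the deployed environment, and any non-empty result would prompt a review and an AIBOM update.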

4. Security and compliance controls

A strong AIBOM includes security testing results, access controls and compliance mappings. It shows how AI components align with internal policies and external regulations.

This is increasingly important as AI governance frameworks mature.
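One simple way to use these records is a gap check: for each AI component, compare the evidence on file with the evidence policy requires. The sketch below is illustrative only; the evidence categories and component names are assumptions, and real requirements would come from your own policies and applicable regulations.

```python
# Hypothetical set of evidence types that every AI component must have.
REQUIRED_EVIDENCE = {"security_test", "access_review", "policy_mapping"}


def missing_evidence(components: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return, per component, the required evidence that is not on file."""
    gaps: dict[str, set[str]] = {}
    for name, evidence in components.items():
        missing = REQUIRED_EVIDENCE - evidence
        if missing:
            gaps[name] = missing
    return gaps


# Invented example records.
on_file = {
    "intent-classifier": {"security_test", "access_review", "policy_mapping"},
    "third-party-embedding-api": {"access_review"},
}
gaps = missing_evidence(on_file)
```

Here `gaps` lists only the third-party component, which lacks a security test result and a policy mapping. A report like this gives audit preparation a concrete starting point.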

Why CISOs should prioritise AIBOM adoption

Security leaders are accountable for risks they cannot always see. An AI Bill of Materials changes that balance.

It enables informed risk decisions rather than reactive controls. It supports board-level conversations with evidence rather than assumptions. And it creates a common language between security, data science and engineering teams.

According to Gartner, organisations that implement structured AI governance are more likely to scale AI safely and sustainably. AIBOM is a foundational part of that governance.

AIBOM and regulatory readiness

Regulators worldwide are tightening expectations around AI transparency and accountability. Frameworks such as the EU AI Act emphasise traceability, risk management and documentation.

An AI Bill of Materials directly supports these requirements. It provides auditable records of AI components and their evolution over time.

Rather than scrambling to assemble documentation during audits, organisations with AIBOM are prepared by design.

Operational benefits beyond compliance

An AI Bill of Materials is not just about risk avoidance. It delivers operational value too.

Teams gain faster onboarding and knowledge transfer. Incident response becomes more precise. AI updates are managed with greater confidence. Most importantly, AIBOM builds trust. Internal stakeholders trust systems they understand. Customers trust organisations that can explain how AI decisions are made.

Common challenges when implementing AIBOM

Adopting AIBOM is not without obstacles.

Many organisations struggle with ownership boundaries between security, data science and engineering. Tooling may be fragmented. Documentation practices are often inconsistent.

The key is to start small. Focus on high-impact AI systems first. Build repeatable processes. Integrate AIBOM into existing security and governance workflows rather than creating parallel structures.

Best practices for building AIBOM at scale

Successful AIBOM programmes share a few common traits.

They treat the AI Bill of Materials as a process, not a document. Automation is used where possible, but human oversight remains essential. Governance frameworks are aligned with business objectives, not imposed in isolation.

We also see better outcomes when AIBOM initiatives are framed as enablers of innovation rather than blockers.

Conclusion

Many enterprises lack the internal capacity to design and operationalise AIBOM alone. External partners can accelerate maturity by bringing proven frameworks, tooling expertise and regulatory insight.

AI is becoming foundational to enterprise operations. With that comes responsibility.

This AIBOM guide shows that transparency, trust and innovation are not opposing goals. When implemented thoughtfully, an AI Bill of Materials strengthens security while enabling confident AI adoption.

Security leaders who act now will be better prepared for regulatory scrutiny, supply chain risks and the next wave of AI-driven change.

If you are exploring how to implement an AI Bill of Materials or strengthen AI governance, we are here to help. A short conversation can clarify next steps and avoid costly missteps.

Ready to bring structure and confidence to your AI security strategy? Speak with us to explore how our in-house SBOM management tool can support your security and operational needs, and how AIBOM can be integrated into your existing security and governance frameworks.

AIBOM FAQs

How often should an AIBOM be updated?

An AI Bill of Materials should be updated whenever models, data sources or dependencies change. Continuous updates are ideal for high-risk systems.
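One way to detect that a change has occurred is to fingerprint the current state of a system and compare it with the fingerprint stored alongside the AIBOM. The sketch below is a minimal illustration; the snapshot layout and function names are assumptions.

```python
import hashlib
import json


def fingerprint(snapshot: dict) -> str:
    """Hash a snapshot of models, data sources and dependencies.

    Keys are sorted so the same content always yields the same hash,
    regardless of the order in which it was recorded.
    """
    canonical = json.dumps(snapshot, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def needs_update(recorded_fingerprint: str, current_snapshot: dict) -> bool:
    """True when the current state no longer matches the recorded AIBOM."""
    return fingerprint(current_snapshot) != recorded_fingerprint


# Invented example snapshots.
recorded = {"model": "support-ft-v2@2.0", "dataset": "support-tickets-2023"}
recorded_fp = fingerprint(recorded)

unchanged = {"dataset": "support-tickets-2023", "model": "support-ft-v2@2.0"}
retrained = {"model": "support-ft-v2@2.1", "dataset": "support-tickets-2023"}
```

With these values, `needs_update(recorded_fp, unchanged)` is false because only the key order differs, while `needs_update(recorded_fp, retrained)` is true because the model version changed. For high-risk systems, a check like this could run on every deployment.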

Does AIBOM slow down AI development?

When implemented well, AIBOM improves clarity and reduces rework. It supports faster, safer innovation rather than slowing teams down.

Can AIBOM be automated?

Parts of AIBOM can be automated, especially dependency tracking and version control. Human oversight remains critical for data and ethical considerations.

Is AIBOM relevant for small AI deployments?

Yes. Even limited AI use can introduce risk. A lightweight AIBOM helps establish good practices early.