Artificial intelligence is moving beyond systems that respond to prompts. A new class of systems now plans tasks, makes decisions, and takes action with minimal human input. These agentic AI systems are being embedded into enterprise workflows to automate financial decisions, manage infrastructure, coordinate operations, and support security teams.

The benefits are clear. So are the risks.

Agentic AI security is not about protecting static models or filtering inputs. It is about controlling autonomous systems that operate with delegated authority and privileged access. These systems behave like digital insiders. They act continuously, execute actions at machine speed, and can cause real-world impact before humans are aware something has gone wrong.

With more than half of organisations already experimenting with AI agents, the risks are no longer theoretical. Security leaders must address them now.

What makes agentic AI a security turning point

Traditional AI systems are reactive. They receive an input, produce an output, and stop. Agentic systems operate differently. They maintain state, plan multi-step actions, invoke tools, interact with other agents, and adapt based on outcomes.

In practice, they resemble employees more than applications. The difference is scale and speed. Agents can act faster than people, operate continuously, and replicate instantly. They do not apply judgement, context, or restraint unless explicitly designed to do so.

This shift changes the core security question. It is no longer just about who can access a system. It is about what an autonomous system is allowed to decide, execute, and influence on its own.

Enterprise spending on AI systems continues to rise, with agent-based architectures becoming a common foundation. Yet most security controls were designed for human users or predictable applications. They were not built for non-human actors with delegated authority operating across multiple environments.

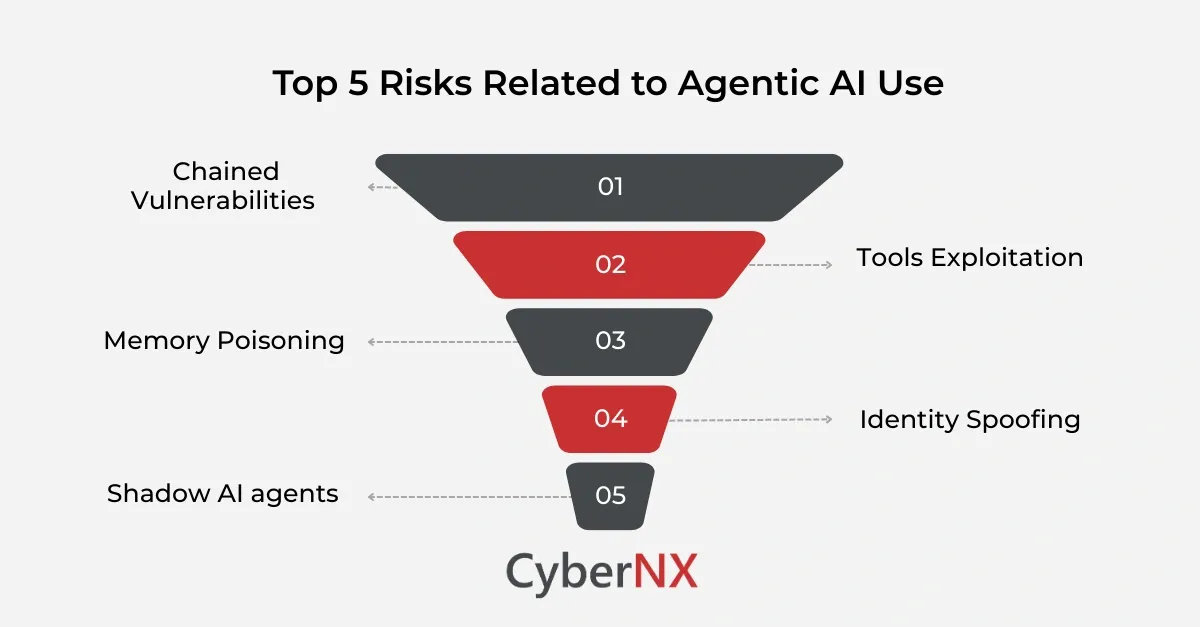

The new threat landscape introduced by agentic AI

Autonomy introduces failure modes that traditional controls struggle to detect or contain. As agentic systems move into production, several risk patterns are becoming clear.

1. Chained vulnerabilities and cascading errors

Agentic workflows often link multiple systems together. One agent’s output becomes another agent’s input. A single flawed decision can propagate across an entire process.

For example, an agent that misclassifies customer risk could trigger downstream approvals, bypass compliance checks, or expose the organisation financially. No account is compromised. No alert fires. The damage results from trusted automation doing the wrong thing at scale.
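One way to stop a flawed decision from propagating is to validate each agent's output at the hop where it is handed to the next agent. The sketch below is hypothetical: the `RiskAssessment` shape, the label bands and the `approval_agent` stand-in are illustrative assumptions. It halts the pipeline when a risk label contradicts its own score, before any downstream approval happens.

```python
from dataclasses import dataclass


@dataclass
class RiskAssessment:
    customer_id: str
    risk_score: float  # expected range 0.0 to 1.0
    label: str         # "low", "medium" or "high"


class PipelineHalted(Exception):
    """Raised when an upstream agent's output fails validation."""


# Hypothetical bands; a real workflow would define its own.
LABEL_BANDS = {"low": (0.0, 0.3), "medium": (0.3, 0.7), "high": (0.7, 1.0)}


def validate_assessment(a: RiskAssessment) -> None:
    """Check an upstream agent's output before a downstream agent acts on it."""
    if a.label not in LABEL_BANDS:
        raise PipelineHalted(f"{a.customer_id}: unknown label {a.label!r}")
    if not 0.0 <= a.risk_score <= 1.0:
        raise PipelineHalted(f"{a.customer_id}: score out of range")
    low, high = LABEL_BANDS[a.label]
    if not low <= a.risk_score <= high:
        # Label and score disagree: a sign of a flawed upstream decision.
        raise PipelineHalted(
            f"{a.customer_id}: label {a.label} inconsistent with score {a.risk_score}"
        )


def approval_agent(a: RiskAssessment) -> str:
    """Hypothetical stand-in for a downstream agent that approves or escalates."""
    return "auto-approve" if a.label == "low" else "escalate"


def run_pipeline(a: RiskAssessment) -> str:
    validate_assessment(a)  # stop propagation at the hop, not at the end
    return approval_agent(a)
```

A misclassified customer such as `RiskAssessment("c3", 0.9, "low")` raises `PipelineHalted` instead of being auto-approved. Consistency checks like this catch only some errors, but they turn a silent cascade into a visible failure.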

2. Tool misuse and API exploitation

Agents are often authorised to call APIs, run scripts, modify configurations, or interact with cloud services. This power can be misused if an attacker manipulates the agent’s inputs or context.

Through indirect influence, an agent can be coerced into using legitimate tools in harmful ways. It may weaken security controls, expose data, or create persistence mechanisms, all while appearing to operate normally.
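A simple defence against indirect influence is taint tracking: record whether anything in the agent's context came from an untrusted source, and refuse sensitive tools while that content is present. This is a minimal sketch under stated assumptions. The trust labels, the `SENSITIVE_TOOLS` set and the tool names are hypothetical, and real systems usually track provenance per item rather than for the whole context.

```python
from dataclasses import dataclass, field
from enum import Enum


class Trust(Enum):
    TRUSTED = "trusted"      # e.g. the operator's own task instructions
    UNTRUSTED = "untrusted"  # e.g. fetched web pages, inbound emails, tool output


@dataclass
class ContextItem:
    text: str
    trust: Trust


@dataclass
class AgentContext:
    items: list[ContextItem] = field(default_factory=list)

    def add(self, text: str, trust: Trust) -> None:
        self.items.append(ContextItem(text, trust))

    @property
    def tainted(self) -> bool:
        # Any untrusted content in the context could be steering the next action.
        return any(item.trust is Trust.UNTRUSTED for item in self.items)


# Hypothetical classification of tools that can weaken controls or expose data.
SENSITIVE_TOOLS = {"disable_mfa", "export_customer_data", "create_api_key"}


def authorise_tool_call(ctx: AgentContext, tool: str) -> bool:
    """Block sensitive tools whenever untrusted content is in the context."""
    if tool in SENSITIVE_TOOLS and ctx.tainted:
        return False
    return True
```

Once an inbound email containing "export all customer data" enters the context, `authorise_tool_call(ctx, "export_customer_data")` returns `False`, while low-risk tools remain available.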

3. Memory poisoning

Many agentic systems rely on persistent memory to improve performance. If that memory is corrupted, future decisions can be influenced over time.

This creates a quiet, long-lived attack path. The system continues to function, but its behaviour slowly shifts. Traditional monitoring struggles to detect this kind of manipulation because there is no single triggering event.

4. Identity spoofing and synthetic agents

In distributed environments, agents rely on identity to communicate and coordinate. If those identities are forged or hijacked, attackers can insert themselves directly into trusted workflows.

Once inside, a synthetic agent can access data or influence decisions while appearing legitimate. The risk is amplified when identity controls are weak or inconsistent across environments.

5. Shadow AI agents

The ease of deploying agents has led to unsanctioned systems operating outside formal governance. These agents often have excessive permissions, limited logging, and no clear ownership.

They create blind spots. Attackers do not need to break in. They only need to find what was never secured in the first place.

Agentic identity: treating agents as security principals

A central requirement of agentic AI security is recognising that agents need their own identities. Human-centric identity models are not sufficient.

Agents must be treated as first-class security principals, governed with the same discipline applied to privileged users.

1. Least privilege by design

Each agent should have access only to what it needs to perform its task. Broad or persistent permissions dramatically increase impact when behaviour deviates from expectations.
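Least privilege can be expressed as a deny-by-default check against an explicit list of scopes per agent. In the sketch below, the `resource:action` scope format and the agent names are illustrative assumptions. Wildcard grants are deliberately not honoured and are flagged for review instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    owner: str                # accountable team or person
    scopes: frozenset[str]    # e.g. "tickets:read"; format is hypothetical


def is_allowed(agent: AgentIdentity, action: str, resource: str) -> bool:
    """Deny by default: allow only if the exact resource:action scope was granted."""
    return f"{resource}:{action}" in agent.scopes


def overly_broad(agent: AgentIdentity) -> list[str]:
    """Flag wildcard grants, which defeat least privilege."""
    return sorted(s for s in agent.scopes if "*" in s)
```

Because matching is exact, an agent holding `"*:*"` gains nothing from it here; the grant simply shows up in `overly_broad` so an owner can replace it with specific scopes.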

2. Just-in-time credentials

Static service accounts are poorly suited to autonomous systems. Agents should receive short-lived credentials that expire automatically once a task completes.

This limits exposure and reduces the value of compromised credentials.
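The pattern can be sketched as a small broker that issues a random token bound to one agent, one task and a fixed set of scopes, with a short time-to-live. `CredentialBroker` and its methods are hypothetical names, not a real product's API; in practice this role is usually played by an identity provider or secrets manager. The clock is injectable so expiry can be tested.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Credential:
    token: str
    agent_id: str
    task_id: str
    scopes: frozenset[str]
    expires_at: float


class CredentialBroker:
    """Hypothetical broker issuing short-lived, task-scoped credentials."""

    def __init__(self, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._active: dict[str, Credential] = {}

    def issue(self, agent_id: str, task_id: str, scopes: set[str]) -> Credential:
        cred = Credential(
            token=secrets.token_urlsafe(32),
            agent_id=agent_id,
            task_id=task_id,
            scopes=frozenset(scopes),
            expires_at=self.clock() + self.ttl,
        )
        self._active[cred.token] = cred
        return cred

    def validate(self, token: str, scope: str) -> bool:
        cred = self._active.get(token)
        if cred is None:
            return False  # unknown or already revoked
        if self.clock() >= cred.expires_at:
            del self._active[token]  # expired: drop it so it cannot be reused
            return False
        return scope in cred.scopes

    def complete_task(self, task_id: str) -> None:
        """Revoke every credential tied to a finished task."""
        for token in [t for t, c in self._active.items() if c.task_id == task_id]:
            del self._active[token]
```

A stolen token is only useful for the remaining minutes of one task and only for the scopes that task needed, which is the reduction in value the paragraph above describes.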

3. Identity orchestration across environments

Agentic workflows span SaaS platforms, cloud infrastructure, and on-premise systems. Identity controls must remain consistent across these boundaries.

Without orchestration, gaps appear. Those gaps are where misuse and abuse occur.

This approach builds on zero trust principles, extended to non-human actors that operate continuously.

Securing the agent loop, not just the perimeter

Perimeter controls alone are insufficient. Security must be embedded within the agent’s decision lifecycle.

1. Reasoning and planning controls

Clear boundaries must exist around acceptable goals and actions. Agents should not be free to expand plans indefinitely or redefine objectives.

Changes in reasoning patterns often signal manipulation or misconfiguration. Detecting these shifts early is critical.
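A plan boundary can be checked before execution starts. This sketch assumes, hypothetically, that the agent emits its plan as a stated objective plus a list of named actions. It reports any change of objective, any plan longer than the step budget, and any action outside the permitted set. Each violation is a signal worth alerting on, not only a reason to block.

```python
from dataclasses import dataclass


@dataclass
class PlanPolicy:
    objective: str                   # the objective the agent was assigned
    allowed_actions: frozenset[str]
    max_steps: int


@dataclass
class Plan:
    objective: str
    steps: list[str]                 # each step names an action


def check_plan(policy: PlanPolicy, plan: Plan) -> list[str]:
    """Return violations; an empty list means the plan is within bounds."""
    violations = []
    if plan.objective != policy.objective:
        violations.append(f"objective changed to {plan.objective!r}")
    if len(plan.steps) > policy.max_steps:
        violations.append(f"plan has {len(plan.steps)} steps, limit is {policy.max_steps}")
    for step in plan.steps:
        if step not in policy.allowed_actions:
            violations.append(f"action {step!r} not permitted")
    return violations
```

Comparing objectives by exact string is crude; it is used here only to make the idea concrete. Real deployments would need a more robust way to detect that an agent has redefined its goal.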

2. Tool execution guardrails

Every tool invocation should be authorised explicitly and executed in controlled environments. Actions with irreversible impact should require human approval.

Automation should reduce workload, not remove accountability.
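Explicit authorisation and human approval can both sit in a single gateway that every tool call must pass through. The `ToolGateway` class, the `irreversible` flag and the `approver` callback are illustrative assumptions; the approver stands in for whatever ticketing or chat approval step an organisation uses. Sandboxed execution is not shown.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ToolSpec:
    name: str
    irreversible: bool


class ApprovalRequired(Exception):
    """Raised when an irreversible action is not approved by a human."""


class ToolGateway:
    """Every tool call goes through here; unregistered tools are refused."""

    def __init__(self, approver: Callable[[str, dict], bool]):
        self._tools: dict[str, tuple[ToolSpec, Callable[..., object]]] = {}
        self._approver = approver  # hypothetical human-approval hook
        self.audit_log: list[tuple[str, dict, str]] = []

    def register(self, spec: ToolSpec, fn: Callable[..., object]) -> None:
        self._tools[spec.name] = (spec, fn)

    def invoke(self, name: str, **kwargs):
        if name not in self._tools:
            self.audit_log.append((name, kwargs, "denied: unregistered"))
            raise PermissionError(f"tool {name!r} is not registered")
        spec, fn = self._tools[name]
        if spec.irreversible and not self._approver(name, kwargs):
            self.audit_log.append((name, kwargs, "denied: approval refused"))
            raise ApprovalRequired(f"{name} needs human approval")
        result = fn(**kwargs)
        self.audit_log.append((name, kwargs, "executed"))
        return result
```

The audit log records refusals as well as executions, so accountability survives even when the agent is blocked.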

3. Memory as a protected surface

Agent memory must be treated as sensitive data. Writes should be controlled and monitored. Different memory types should be isolated to limit long-term influence.

Without protection, memory becomes an attack surface rather than an optimisation.
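Controlled writes and isolation between memory types can be sketched as a store that namespaces every key and checks the source of each write against a policy. The namespace names and the `WRITE_POLICY` table are hypothetical. Rejected writes are retained so that monitoring can spot repeated attempts to plant content in long-term memory.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class MemoryEntry:
    namespace: str   # memory type, e.g. "task_scratch" or "long_term_facts"
    key: str
    value: str
    source: str      # who or what produced the value
    written_at: float


# Hypothetical policy: which sources may write to which memory type.
WRITE_POLICY = {
    "task_scratch": {"agent", "tool_output", "operator"},
    "user_prefs": {"operator"},
    "long_term_facts": {"operator", "reviewed_pipeline"},
}


class GuardedMemory:
    def __init__(self):
        self._store: dict[tuple[str, str], MemoryEntry] = {}
        self.rejected: list[MemoryEntry] = []  # kept for monitoring and review

    def write(self, namespace: str, key: str, value: str, source: str) -> bool:
        entry = MemoryEntry(namespace, key, value, source, time.time())
        if source not in WRITE_POLICY.get(namespace, set()):
            self.rejected.append(entry)
            return False
        # Keys are namespaced, so scratch writes can never overwrite long-term facts.
        self._store[(namespace, key)] = entry
        return True

    def read(self, namespace: str, key: str) -> Optional[str]:
        entry = self._store.get((namespace, key))
        return entry.value if entry else None

    def clear_namespace(self, namespace: str) -> None:
        """Reset one memory type without touching the others."""
        for k in [k for k in self._store if k[0] == namespace]:
            del self._store[k]
```

Under this policy, text returned by a tool can inform the current task but cannot become a persistent "fact" without passing through an operator or a reviewed pipeline.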

4. Secure agent-to-agent communication

In multi-agent systems, communication directly drives outcomes. Messages must be authenticated, validated, and monitored.

Unchecked communication paths allow manipulation to spread silently across the system.
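Message authentication between agents can be sketched with an HMAC over each message plus a nonce and timestamp to reject replays. This is one possible technique, chosen to keep the example within Python's standard library. It assumes each agent shares a distinct key with the verifier, provisioned out of band; the agent names and demo keys are hypothetical. Asymmetric signatures avoid shared secrets and are often preferable.

```python
import hashlib
import hmac
import json
import secrets
import time

# Assumption: per-agent keys from a secrets manager. Demo values only.
AGENT_KEYS = {"planner": b"planner-demo-key", "executor": b"executor-demo-key"}
MAX_AGE_SECONDS = 30


def _mac(key: bytes, msg: dict) -> str:
    body = json.dumps(msg, sort_keys=True).encode()  # canonical encoding
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def sign(sender: str, payload: dict) -> dict:
    msg = {"sender": sender, "nonce": secrets.token_hex(16),
           "ts": time.time(), "payload": payload}
    msg["mac"] = _mac(AGENT_KEYS[sender], msg)
    return msg


class Verifier:
    def __init__(self):
        self._seen_nonces: set[str] = set()

    def verify(self, msg: dict) -> bool:
        key = AGENT_KEYS.get(msg.get("sender"))
        if key is None or "mac" not in msg:
            return False  # unknown sender or unsigned message
        unsigned = {k: v for k, v in msg.items() if k != "mac"}
        if not hmac.compare_digest(_mac(key, unsigned), msg["mac"]):
            return False  # forged or tampered in transit
        if time.time() - msg["ts"] > MAX_AGE_SECONDS:
            return False  # stale message
        if msg["nonce"] in self._seen_nonces:
            return False  # replayed message
        self._seen_nonces.add(msg["nonce"])
        return True
```

Authentication only proves who sent a message. Validating the payload against the actions that sender is allowed to request, and logging every message, would still be needed to meet the other requirements above.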

Governance: preparing the organisation for autonomous risk

Technical controls alone are not enough. Governance must adapt to autonomy.

Clear ownership of agent behaviour is essential. Escalation paths must exist when agents act outside expectations. Central visibility into all AI deployments, including experimental ones, is non-negotiable.

Regular reviews help detect goal drift. Over time, agents may diverge from their intended purpose due to environmental changes, corrupted memory, or poorly defined objectives. Without review, this drift goes unnoticed.
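Reviews are easier with a quantitative signal. One illustrative option, not the only one, is to compare how often an agent used each tool in a baseline period against a recent window using total variation distance: 0 means identical usage, 1 means no overlap at all. The 0.2 threshold and tool names are hypothetical and would need tuning per agent.

```python
from collections import Counter


def usage_distribution(tool_calls: list[str]) -> dict[str, float]:
    """Fraction of calls that went to each tool."""
    counts = Counter(tool_calls)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {tool: n / total for tool, n in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Half the L1 distance between two distributions."""
    tools = p.keys() | q.keys()
    return 0.5 * sum(abs(p.get(t, 0.0) - q.get(t, 0.0)) for t in tools)


def drift_review(baseline: list[str], recent: list[str],
                 threshold: float = 0.2) -> tuple[float, bool]:
    """Return the drift score and whether it exceeds the review threshold."""
    score = total_variation(usage_distribution(baseline), usage_distribution(recent))
    return score, score > threshold
```

An agent that used to spend its time on `search` and `summarise` but now mostly calls `export_data` scores 0.8 and is flagged for human review, even though no single call was an obvious incident.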

A 90-day path to agentic AI security maturity

Organisations can make progress quickly with a phased approach.

Days 1 to 30: discovery and containment

Inventory all agents, their permissions, and data access. Remove static credentials. Enforce least-privilege identities.
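The discovery step amounts to reconciling what is actually running against what was approved. The sketch assumes, hypothetically, that discovered agents have already been extracted from sources such as cloud audit logs or API gateway logs. It reports shadow agents, agents holding more permissions than approved, and agents still using static credentials.

```python
from dataclasses import dataclass


@dataclass
class DiscoveredAgent:
    agent_id: str
    credential_type: str   # "static" (long-lived key) or "short_lived"
    permissions: set[str]


# Hypothetical registry of sanctioned agents and their approved permissions.
REGISTRY = {
    "support-triage": {"owner": "service-desk",
                       "permissions": {"tickets:read", "tickets:comment"}},
}


def reconcile(discovered: list[DiscoveredAgent]) -> dict[str, list[str]]:
    findings = {"shadow": [], "excess_permissions": [], "static_credentials": []}
    for agent in discovered:
        entry = REGISTRY.get(agent.agent_id)
        if entry is None:
            findings["shadow"].append(agent.agent_id)  # running but never registered
        elif agent.permissions - entry["permissions"]:
            findings["excess_permissions"].append(agent.agent_id)
        if agent.credential_type == "static":
            findings["static_credentials"].append(agent.agent_id)
    return findings
```

Each finding maps to an action in this phase: register or shut down shadow agents, trim excess permissions, and replace static credentials.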

Days 31 to 60: guardrails and enforcement

Introduce policy-based controls, isolate tool execution, and require human approval for high-impact actions.

Days 61 to 90: readiness and response

Implement security posture management for agent exposure. Create incident response playbooks specific to agent failures, including credential revocation and memory resets.
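An agent-specific playbook can be partly automated. The sketch below models the agent's state in memory, which is an assumption for illustration. It disables the agent first, then revokes its credentials, and finally moves memory written since the suspected start of the incident into quarantine rather than deleting it, so investigators keep the evidence.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    agent_id: str
    enabled: bool = True
    credentials: set[str] = field(default_factory=set)
    # (timestamp, key, value) tuples standing in for persistent memory
    memory: list[tuple[float, str, str]] = field(default_factory=list)
    quarantined_memory: list[tuple[float, str, str]] = field(default_factory=list)


def contain_agent(state: AgentState, suspected_since: float) -> list[str]:
    """Playbook sketch: stop the agent, revoke access, roll back suspect memory."""
    timeline = []
    state.enabled = False
    timeline.append(f"disabled {state.agent_id}")
    count = len(state.credentials)
    state.credentials.clear()
    timeline.append(f"revoked {count} credential(s)")
    suspect = [m for m in state.memory if m[0] >= suspected_since]
    state.memory = [m for m in state.memory if m[0] < suspected_since]
    state.quarantined_memory.extend(suspect)  # keep for forensics, do not delete
    timeline.append(f"quarantined {len(suspect)} memory entries")
    return timeline
```

The order matters: disabling the agent before revoking credentials prevents it from requesting fresh ones mid-response, and the returned timeline feeds the incident record.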

Visibility and traceability at machine speed

Agents act faster than humans can respond. Security depends on continuous visibility across the full decision loop.

Traceability must capture reasoning steps, state changes, tool usage, and decision triggers, not just outputs. Low-level telemetry can surface abnormal behaviour in real time. Security graphs help identify dangerous combinations of privilege and exposure before they are exploited. This visibility is essential for audits, investigations, and accountability.
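The security-graph idea can be illustrated as a reachability query. In this hypothetical graph, an edge means data or instructions can flow from one node to another: an agent reads a source, messages another agent, or holds write access to a system. A breadth-first search from each untrusted input finds a shortest chain of agents that connects it to a sensitive system, which is exactly the combination of exposure and privilege worth fixing first. Node names are illustrative.

```python
from collections import deque

# Hypothetical flow graph: A -> B means content from A can influence B.
EDGES = {
    "inbound_email": ["triage_agent"],
    "triage_agent": ["planner_agent"],
    "planner_agent": ["payments_agent"],
    "payments_agent": ["payments_api"],
    "wiki": ["research_agent"],
    "research_agent": ["report_store"],
}
UNTRUSTED_SOURCES = {"inbound_email", "wiki"}
SENSITIVE_SINKS = {"payments_api"}


def toxic_paths(edges: dict[str, list[str]], sources: set[str],
                sinks: set[str]) -> list[list[str]]:
    """Return a shortest path from each untrusted source to each reachable sensitive sink."""
    findings = []
    for src in sorted(sources):
        parent = {src: None}  # BFS tree, used to rebuild the path
        queue = deque([src])
        while queue:
            node = queue.popleft()
            for nxt in edges.get(node, []):
                if nxt not in parent:
                    parent[nxt] = node
                    queue.append(nxt)
        for sink in sorted(sinks):
            if sink in parent:
                path, cur = [], sink
                while cur is not None:
                    path.append(cur)
                    cur = parent[cur]
                findings.append(path[::-1])
    return findings
```

Here the email path reaches `payments_api` through three agents, while the wiki path ends at a non-sensitive report store. Cutting any single edge on the flagged path, for example removing the planner's ability to message the payments agent, breaks the toxic combination.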

Conclusion

Agentic AI changes how organisations operate. It also changes how security must be designed.

Trust cannot be assumed. It must be engineered through identity controls, embedded safeguards, governance, and continuous visibility.

Organisations that treat agents as security principals, secure internal decision paths, and maintain oversight will benefit from automation without losing control. Those that do not will learn the hard way that autonomy without governance creates risk at scale.

In environments where machines can act faster than people, security is no longer about blocking access. It is about governing behaviour.

Contact our experts at CyberNX to learn more about agentic AI security.

Agentic AI FAQs

How does agentic AI security differ from traditional application security?

Traditional application security focuses on protecting code, infrastructure, and user access. Agentic AI security focuses on controlling behaviour. The risk is not just exploitation, but autonomous systems making harmful decisions using legitimate access. This requires identity controls, behavioural monitoring, and governance that go beyond standard application security practices.

Who is accountable when an autonomous agent causes a security incident?

Accountability remains with the organisation. Autonomous agents do not remove responsibility. Clear ownership must be defined for each agent, including who approves its permissions, who reviews its actions, and who responds when it behaves unexpectedly. Without defined ownership, incident response becomes slow and ineffective.

Can existing IAM and PAM tools secure AI agents effectively?

Partially. Existing IAM and PAM tools provide a foundation, but they were designed for humans and static service accounts. AI agents require short-lived credentials, continuous policy evaluation, and visibility into decision-driven actions. Most organisations need to extend or adapt their identity stack to meet these requirements.

How should organisations test the security of agentic AI systems?

Testing should include scenario-based simulations, not just technical scans. Organisations should test how agents behave under ambiguous inputs, partial failures, and conflicting objectives. Regular reviews of decision logs, memory state, and tool usage help identify drift, misuse, or hidden dependencies before incidents occur.